Using SPARKPLUS

Assessment of Team work, group work and collaborative learning

Team-based assessment projects and group work are often used to develop team and collaborative skills and crucial graduate attributes. As with any learning activity, the first step is to ensure that students understand the objective, the intended learning outcomes and what they must do to demonstrate achievement of those outcomes.

Team/Group work (professional) skills are best learnt in authentic contexts where there are assessment consequences and feedback for both individual and team actions and outcomes. Yet in many team/group work opportunities only the artefact the team produces and not the team skills are assessed. This practice means many dysfunctional and/or poor teams can achieve a high grade without demonstrating any level of teamwork skill achievement.

Both academics and students have expressed concerns about teamwork activities. Many academics see teamwork assessments as lacking rigour: they can’t be sure who did the work, the contribution of individual team members can be difficult to evaluate, and it is unclear how to deal with free-riding students who have made little or no contribution.

Conversely, students commonly complain that group work is unfair. Their major complaints are free riders, poorly performing students and receiving equal marks for unequal contributions, and high-contributing students often feel inadequately rewarded for their efforts.

Since assessment strongly influences learning, any course with an objective to improve team work, peer learning and/or collaboration must have an assessment regime that rewards demonstrated achievement (conversely, has meaningful consequences for non-achievement).

It is often difficult, if not impossible, for an academic to fairly assess the contribution of individual students to a team project since most of the work occurs outside of scheduled contact hours. Accordingly, grading the contribution of individuals to a team task is best handed over to the team members themselves since they have the most relevant information.

Self and Peer Assessment

Self and peer assessment provides a solution for both achieving the above mentioned objectives and overcoming potential inequities of equal marks for unequal contributions. Group members are responsible for negotiating and managing the balance of contributions and then assessing whether the balance has been achieved.

SPARKPLUS-facilitated self and peer assessment promotes academic honesty (features in SPARKPLUS highlight dishonest assessments), increases students’ motivation (there are consequences for not contributing, for poor teamwork skills and/or for not delivering assigned tasks at the agreed standard) and models authentic professional practice (nearly every professional has an annual performance review where evidence of their contribution and performance is discussed and assessed).

SPARKPLUS Group Contribution Mode

SPARKPLUS is a web-based self and peer assessment tool that enables students to confidentially rate their own and their peers' contributions to a team task.

Being a criteria-based tool SPARKPLUS allows academics the flexibility to choose or create specifically targeted criteria to allow any task or attribute demonstration to be assessed. In addition, it facilitates the use of common categories, to which academics may link their chosen criteria, providing a means for both academics and students to track students’ development as they progress through their degree.

In addition, SPARKPLUS lets students provide anonymous written feedback to their peers to support their ratings, as well as comments to support their ratings of themselves. It also provides a number of options for graphically reporting results and automates data collection, collation, calculation and distribution of feedback and results.

SPARKPLUS is extremely powerful. The information provided here is intended to introduce you to SPARKPLUS and get you started. For users wanting more training, the SPARKPLUS team runs workshops to increase user expertise and improve assessment task design, scaffolding, students’ learning and their learning experience. For more information contact SPARKPLUS Admin.

Assessment Factors

SPARKPLUS produces two assessment factors:

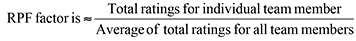

1. The Relative Performance factor (RPF) is a weighting factor determined by both the self and peer rating of a student’s contribution (Note: there are several different RPF algorithms, the formula below shows the indicative relationship).

The RPF is used to change a team mark for an assessment task into an individual mark as shown below:

Individual mark = team mark * individual’s RPF

For example, if a group receives 80/100 for their project and a student in that group receives an RPF of 0.9 for their contribution (reflecting a lower-than-average team contribution), the student will receive an individual mark of 80 × 0.9 = 72.

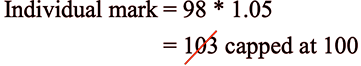

The maximum mark an individual can achieve for an assessment is capped at 100%, reflecting the maximum available mark for demonstrating the associated learning outcome achievement. For example, if a group receives 98/100 for their project and a student in that group receives an RPF of 1.05 for their contribution (reflecting a higher-than-average team contribution), the uncapped result would be 98 × 1.05 = 102.9, so the student will receive an individual mark of 100.
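The conversion and cap described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the text, not SPARKPLUS code; the function name `individual_mark` and the `max_mark` parameter are hypothetical.

```python
def individual_mark(team_mark: float, rpf: float, max_mark: float = 100.0) -> float:
    """Scale a team mark by a student's RPF, capped at the maximum available mark.

    Hypothetical helper for illustration only; it is not part of SPARKPLUS.
    """
    if team_mark < 0 or rpf < 0:
        raise ValueError("team_mark and rpf must be non-negative")
    # Individual mark = team mark * individual's RPF, never more than max_mark.
    return min(team_mark * rpf, max_mark)


# The two worked examples from the text.
print(individual_mark(80, 0.9))   # lower-than-average contribution: about 72
print(individual_mark(98, 1.05))  # 102.9 before the cap, so the cap applies
```

Keeping the cap as a parameter makes the same sketch usable for tasks marked out of something other than 100.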

Typically, RPF factors between 0.97 and 1.02 reflect that your contribution was in line with the average contribution for your team.

2. The Self Assessment to Peer Assessment (SA/PA) factor is the ratio of a student’s own rating of themselves compared to the average rating of their contribution by their peers.

The SA/PA factor has strong feedback value for development of critical reflection and evaluation skills. A SA/PA factor greater than 1 means that a student has rated their own performance higher than the average rating they received from their peers and vice versa.

Typically, SA/PA factors between 0.95 and 1.05 reflect that your assessment of your contribution is roughly in agreement with the average assessment of your contribution by your team.

SA/PA factors above 1.08 reflect that a student rated their contribution to the team activity as being significantly more than the average rating of their contribution by their team peers. Conversely, SA/PA factors below 0.93 reflect that a student rated their contribution to the team activity as being significantly less than the average rating of their contribution by their team peers.
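The following Python sketch shows how these bands might be applied. It assumes the SA/PA factor is the plain ratio of a numeric self-rating to the mean peer rating, as described above; the exact calculation SPARKPLUS performs may differ. The function names, the numeric rating scale and the "borderline" label for values between the stated bands are all assumptions made for illustration.

```python
from statistics import mean


def sa_pa_factor(self_rating: float, peer_ratings: list[float]) -> float:
    """Ratio of a self-rating to the average peer rating (illustrative only)."""
    if not peer_ratings:
        raise ValueError("at least one peer rating is required")
    peer_average = mean(peer_ratings)
    if peer_average <= 0:
        raise ValueError("average peer rating must be positive")
    return self_rating / peer_average


def interpret_sa_pa(factor: float) -> str:
    """Map an SA/PA factor onto the bands quoted in the text."""
    if factor > 1.08:
        return "self-rating significantly higher than peers"
    if factor < 0.93:
        return "self-rating significantly lower than peers"
    if 0.95 <= factor <= 1.05:
        return "roughly in agreement with peers"
    # The text does not describe 0.93-0.95 or 1.05-1.08; this label is an assumption.
    return "borderline"


factor = sa_pa_factor(4, [3, 3, 4, 4])  # hypothetical ratings on a numeric scale
print(round(factor, 2), interpret_sa_pa(factor))
```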

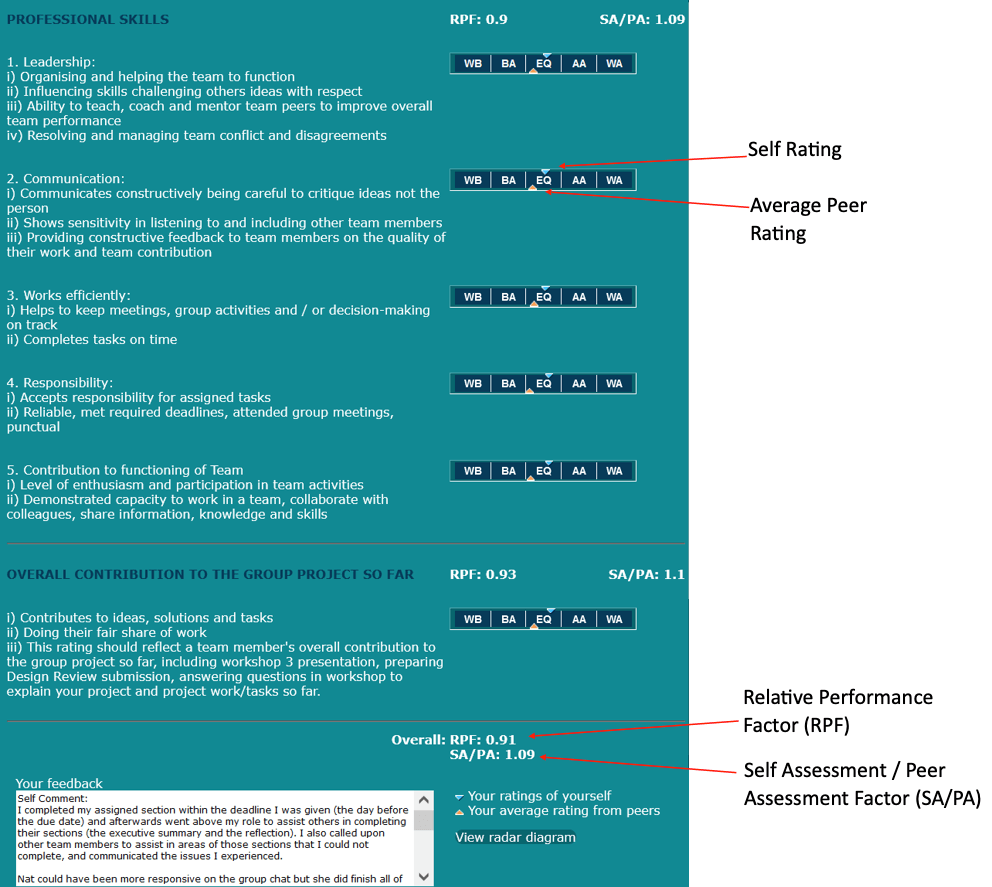

SPARKPLUS Results Screen

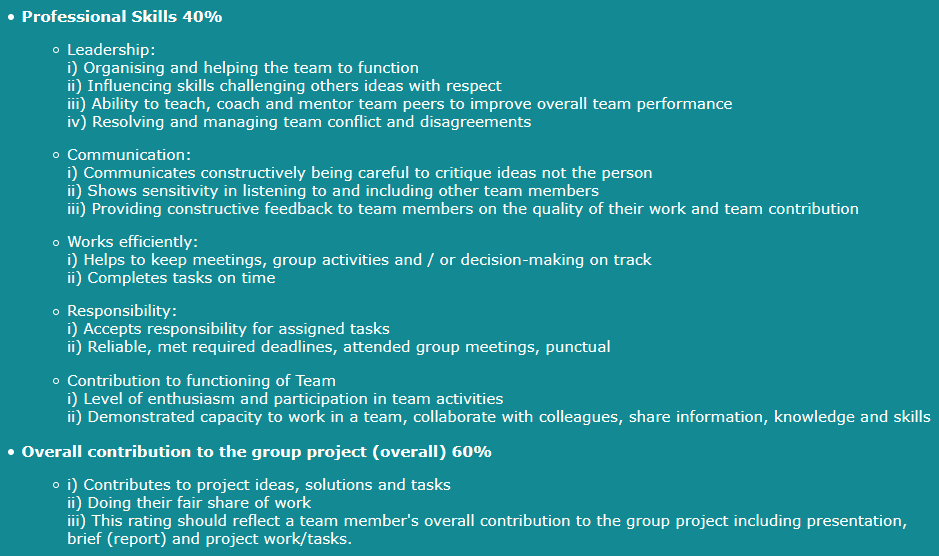

The results screen displays a student’s Relative Performance Factor (RPF) and Feedback Factor (SA/PA) for each category (in the screenshot above, Professional Skills and Contribution to Project Tasks) and their overall performance and feedback factors (bottom of screen). Note: the contribution of different categories to the overall factors can be weighted (in the screenshot above, Professional Skills was weighted at 40% and Contribution to Project Tasks at 60%).

The sliders provide a comparison of a student’s self-rating of their own contribution (blue triangle on the top of slider) to the average rating they received from their team peers (orange triangle on the bottom of slider) for each criterion. The student whose results are shown in the above figure rated their performance higher than the average rating they received from their peers (the blue triangle reflecting their self-rating is higher than the orange triangle reflecting the average rating they received from their team peers).
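As a rough illustration of category weighting, the sketch below combines per-category factors into an overall factor using a weighted average. How SPARKPLUS actually combines category factors is not stated here, so the weighted arithmetic mean, the function name `overall_factor` and the example factor values are assumptions; only the 40%/60% weights come from the text.

```python
def overall_factor(category_factors: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-category factors (an assumed combination rule)."""
    if category_factors.keys() != weights.keys():
        raise ValueError("every category needs exactly one weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted_sum = sum(category_factors[name] * weights[name] for name in weights)
    return weighted_sum / total_weight


# Weights from the text; the category RPF values are made up for the example.
weights = {"Professional Skills": 0.4, "Contribution to Project Tasks": 0.6}
rpf_by_category = {"Professional Skills": 1.05, "Contribution to Project Tasks": 0.95}
print(round(overall_factor(rpf_by_category, weights), 2))
```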

The text box displays the self-comments the student provided to support their ratings of themself and the anonymous feedback comments about their team contribution and performance from their team peers. In this case you can see that the comments indicate some reservations about the student’s contribution to the project (could have been more responsive on the group chat).

Setting Criteria and feedback prompts

Setting criteria is not a trivial task. Instructors should thoughtfully select their assessment criteria to promote the desired team behaviours, skill development and knowledge associated with the project learning outcomes. For example, setting a criterion such as "resolving and managing team conflicts and disagreements" (see the prompting statement under the leadership heading in the criteria screenshot below) tells students that they are going to be assessed on their conflict resolution skills. They will therefore be mindful of exercising, demonstrating and hence developing these skills when they are undertaking their project.

To assist instructors to set criteria, SPARKPLUS provides some selectable recommended criteria.

Using Student Feedback Prompt to promote critical evaluation and higher quality targeted feedback

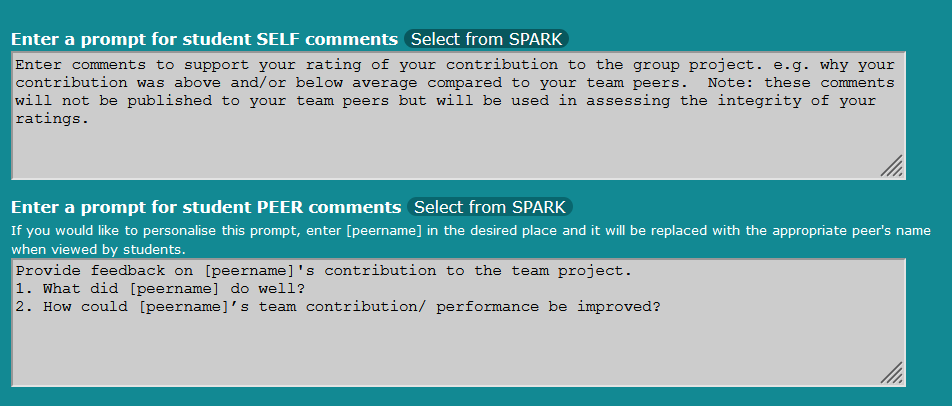

In the group contribution mode, SPARKPLUS allows the academic to enter instructions that prompt students to reflect and be specific when providing comments to support their self-rating and their ratings for their team peers. Using the peer name feature personalises the feedback prompt. I have found that using a well-designed prompt promotes both critical evaluation and higher quality feedback that targets the behaviours, skills and attributes you want students to develop.

The feedback prompt is entered in the text boxes below where you enter assessment criteria. You can also choose from a range of SPARKPLUS suggested feedback prompts.

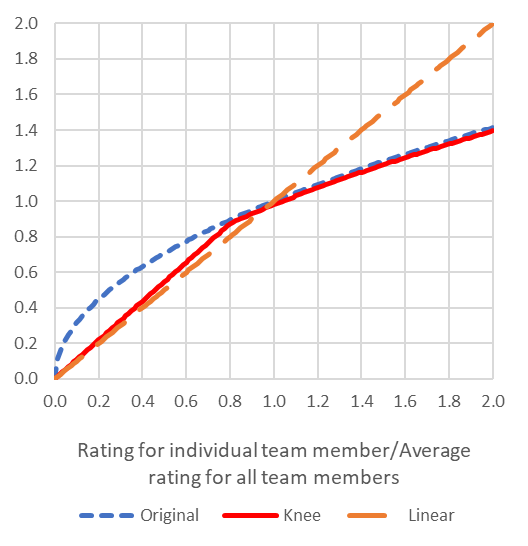

RPF Formulae

We do not mark in a linear fashion. The quality of a submission awarded a mark of 90/100 may be 4 to 5 times the quality of a submission awarded a mark of 60/100. Hence, while the quality increased by 300% to 400%, the awarded mark increased by only 50%.

Similarly, a report awarded 55/100 may be 15% lower in quality than a report awarded 60/100, but the mark decreases by only about 8%.

The SPARKPLUS RPF formulas have been derived after analysing years of assessment data to both improve the fairness of RPF calculations and reflect the nonlinear relationship between assessment quality and awarded marks.

The figure below shows the relationship for each of the three available calculation formulae (two RPF and one Demonstrated Achievement).

The following text provides a brief description of the circumstances for which it is recommended each formula is used:

Original

Consider using in first-year classes when students are still developing their judgement, or when students and instructors are using SPARKPLUS for the first time or are familiarising themselves with the concept of using self and peer assessment to determine team members’ contributions to a group project. The original formula reduces the impact resulting from unfair ratings while fairly rewarding above-average performing students.

Knee

Recommended in senior classes or when students and instructors are already familiar with SPARKPLUS. The knee formula doesn’t overly reward underperforming students, while fairly rewarding above average performing students.

Linear

Only recommended when an assessment and/or quality scale (eg poor to excellent, underachieving to overachieving, Fail to High Distinction) is used without forced norm assessment to assess a performance score reflecting demonstrated achievement, and the average mark, not the RPF, is published. The linear formula should not be used when the RPF will be used to change group marks into individual marks, or when the rating scale requires students to rate their contribution relative to their peers (for example, using a norm-referenced scale, eg Below Average contribution to Above Average contribution).

Non-norm rated assessment

Users may choose to use RPFs with the original or knee formula together with an assessment and/or quality scale (eg poor to excellent, underachieving to overachieving, Fail to High Distinction). In this case forced norm assessment should not be used. This allows the student to receive both an actual (rather than relative) performance rating and an RPF to convert their group mark into an individual mark. If you choose this option, it is recommended that you provide prompting criteria and undertake a benchmarking activity so students are making their judgements using a shared understanding of the level of achievement needed to be demonstrated for each step in the rating scale.

Using the monitoring tool to efficiently monitor and provide feedback to students and teams, even in large classes.

The monitoring tool is a unique and extremely powerful feature of SPARKPLUS. It enables instructors to monitor the performance of their subject’s teams and provide feedback. It also enables instructors to detect and take action when encountering suspected cases of academic dishonesty (students providing dishonest assessments), student free riders, saboteurs and dysfunctional teams.

The best way to discover the power of the monitoring tool is to use it. To get you started it is recommended that you watch the series of relevant videos.

Monitoring Teams

Identify Students Performance Level and Early Intervention

Detecting, Moderating and Intervention Exclusions

Potential Non-Contributors

Group Filters and Other Functionality

Radar Diagrams

Students can also view the self and peer assessment results by viewing their individual and/or group radar diagram.

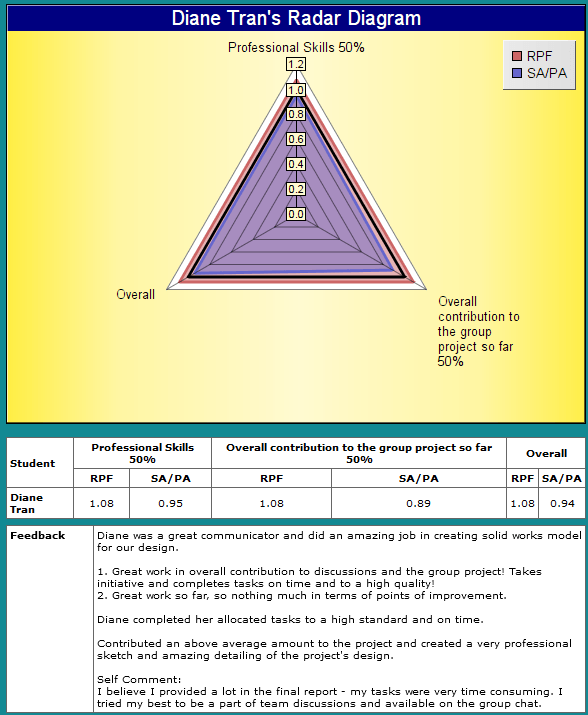

Individual Radar Diagram

The individual radar diagram (shown below) graphically displays a student’s category and overall performance (RPF) and feedback (SA/PA) factors. It also includes a table that reports the student’s factors and the feedback comments they received from their peers. It is envisaged that as a student progresses through their degree these diagrams may be used as evidence of their level of professional competency development.

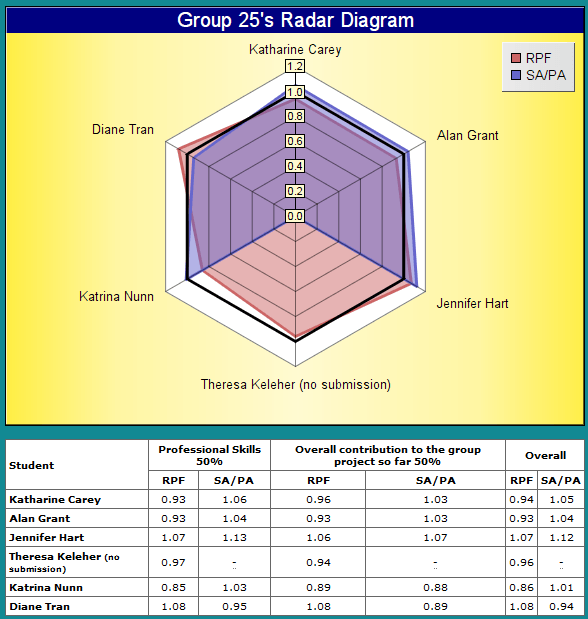

Group radar diagram

The group radar diagram graphically shows the overall performance and feedback factors for the whole team. It also includes a table that reports each team member’s factors. The group radar diagram allows a student to compare their performance to their team peers.

Dealing with Objections

I recommend that the RPF and SA/PA factors are initially released as preliminary, only becoming official after any objections are considered.

To manage any student objections, I use the SPARKPLUS Objection to Group Contribution Rating and/or Awarded Grade mode. The selectable criteria provided within SPARKPLUS can be modified or edited as required to suit your particular circumstances. Before lodging an objection, students are required to both reflect on the results and discuss them with their team members. Using this process, we receive few if any objections, and virtually none of those we do receive are trivial or unfounded.

Collaborative Learning Using Multiple-Choice Questions

When well designed, multiple-choice questions (MCQs) are an extremely powerful learning tool. They can be particularly effective when used in a collaborative environment where teams work together to answer questions.

I have found MCQs to be more effective when students first attempt the questions on their own, as pre work, before their class/workshop/tutorial where they join with their group peers to answer questions collaboratively. It is during these conversations that students share their understanding and explore their differences in knowledge and perspective. These conversations enable students to both identify and clearly articulate what they do and don’t yet understand.

Instructors should take the opportunity to walk around and listen to students as they debate their answers to the questions. The insights they gain will allow instructors to both understand and subsequently address the common misperceptions/difficulties that students are encountering.

The benefits of MCQs are enhanced when multiple attempts with immediate feedback for incorrect answers are provided. I have found this format, pioneered by Dr Larry Michaelsen and implemented in the form of IFAT cards, to enhance learning through promoting more active participation.

Another powerful variation is to have students eliminate the incorrect answers rather than identify the correct answer (see video MCQ eliminating correct answers).

The following three videos will provide you with a brief introduction of how to use collaborative MCQs as a learning tool in your classes.